Google SynthID: Watermarking AI-Generated Text

In a significant move toward greater transparency around AI-generated content, Google has made SynthID Text, its text watermarking technology, generally available. The tool, designed to help developers and businesses watermark and detect AI-generated text, is now accessible through Hugging Face and Google’s newly updated Responsible GenAI Toolkit.

Generative AI models are increasingly used to produce text, and SynthID Text aims to address the resulting concerns about authenticity by helping users distinguish AI-generated text from human-written content. As AI becomes more pervasive, content traceability is critical to maintaining trust in online information.

What Is SynthID Text?

SynthID Text is a watermarking technology developed by Google to help identify text created by generative AI models. It embeds a “mark” in AI-generated content so that the content can later be detected. The tool is now freely available, enabling developers and businesses to embed and detect watermarks in AI-generated text. In a post on X (formerly Twitter), Google described the technology as an important part of its ongoing efforts to ensure transparency in AI content creation.

How Does SynthID Text Work?

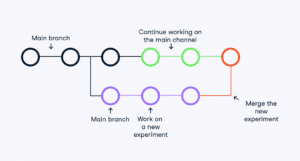

SynthID Text operates by embedding a pattern within the token distribution of AI-generated content. To understand this, it’s essential to grasp how text-generating AI models work.

- Token Prediction: Text-generating models work by predicting which token (a word, part of a word, or a character) comes next in a sequence. They generate text one token at a time, assigning every possible next token a probability score that represents how likely that token is to follow the text generated so far.

- Watermark Embedding: SynthID Text subtly modifies this probability distribution, adjusting the likelihood of certain tokens being generated while preserving the overall quality and coherence of the output. The resulting pattern of token choices acts as a “watermark” that can later be detected by comparing it with the patterns expected for watermarked and non-watermarked text.
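
To make the two steps concrete, here is a toy Python sketch loosely modeled on tournament sampling, the technique described in Google DeepMind’s published SynthID Text research. A keyed hash of the recent context and a candidate token yields a pseudorandom bit (a “g-value”). Several candidates are sampled from the model and pitted against each other in knockout rounds, where the higher g-value advances. Detection then averages the g-values of the observed tokens. Everything here is an assumption for illustration: the SHA-256 hash, the uniform toy “model”, the constants, and all function names. It is not Google’s implementation or the Hugging Face API.

```python
import hashlib
import random

VOCAB_SIZE = 50  # hypothetical toy vocabulary: tokens are the ints 0..49
NGRAM_LEN = 4    # hypothetical: g-values depend on a token plus the 3 tokens before it
LAYERS = 3       # hypothetical: 2**3 = 8 candidates per generation step

def g_value(key, context, token, layer):
    """Keyed pseudorandom bit for (context, candidate, layer).
    SHA-256 is an illustrative stand-in, not SynthID's actual function."""
    data = f"{key}|{context}|{token}|{layer}".encode()
    return hashlib.sha256(data).digest()[0] & 1

def context_at(tokens, i):
    """The NGRAM_LEN - 1 tokens preceding position i (fewer at the start)."""
    return tuple(tokens[max(0, i - (NGRAM_LEN - 1)):i])

def tournament_sample(probs, context, key, rng):
    """Draw 2**LAYERS candidates from the model's distribution, then run
    knockout rounds in which the candidate with the higher g-value advances."""
    candidates = rng.choices(range(len(probs)), weights=probs, k=2 ** LAYERS)
    for layer in range(LAYERS):
        winners = []
        for a, b in zip(candidates[0::2], candidates[1::2]):
            ga = g_value(key, context, a, layer)
            gb = g_value(key, context, b, layer)
            if ga == gb:
                winners.append(rng.choice((a, b)))  # tie: pick either at random
            else:
                winners.append(a if ga > gb else b)
        candidates = winners
    return candidates[0]

def generate_watermarked(length, key, rng):
    """Toy 'model' with a uniform next-token distribution, sampled via the tournament."""
    probs = [1.0] * VOCAB_SIZE
    tokens = []
    for i in range(length):
        tokens.append(tournament_sample(probs, context_at(tokens, i), key, rng))
    return tokens

def mean_g_score(tokens, key):
    """Toy detector: average g-value over every token and layer.
    Expected to be near 0.5 for text produced without the key."""
    total = sum(
        g_value(key, context_at(tokens, i), tok, layer)
        for i, tok in enumerate(tokens)
        for layer in range(LAYERS)
    )
    return total / (len(tokens) * LAYERS)

if __name__ == "__main__":
    marked = generate_watermarked(200, key=42, rng=random.Random(0))
    other_rng = random.Random(1)
    plain = [other_rng.randrange(VOCAB_SIZE) for _ in range(200)]
    print("watermarked:", round(mean_g_score(marked, key=42), 3))
    print("unwatermarked:", round(mean_g_score(plain, key=42), 3))
```

Checked with the right key, the watermarked sequence should score well above 0.5. Random tokens, or the same tokens checked with a different key, should stay near 0.5. A real detector would apply a proper statistical test rather than reading off the mean.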

The watermark is designed to survive light modifications such as cropping or mild paraphrasing, so it can remain detectable even after the text has been slightly edited.
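
A simple counting model shows why light edits leave most of the signal intact while heavy rewriting does not. It assumes, purely for illustration, that each token’s watermark evidence depends only on that token and the `ngram_len - 1` tokens before it. `intact_fraction` and its parameters are hypothetical. Under this model an edit damages only the few positions whose window it falls in. Cropping merely removes positions, although the first few tokens of an excerpt also lose their original context.

```python
def intact_fraction(n_tokens: int, edited: set[int], ngram_len: int = 5) -> float:
    """Fraction of positions whose watermark evidence survives a set of edits.

    Hypothetical model: position i still counts only if token i and the
    ngram_len - 1 tokens before it are all unchanged.
    """
    if n_tokens == 0:
        return 0.0
    intact = 0
    for i in range(n_tokens):
        window = range(max(0, i - ngram_len + 1), i + 1)
        if not any(j in edited for j in window):
            intact += 1
    return intact / n_tokens

if __name__ == "__main__":
    print(intact_fraction(100, {10, 50}))               # light, scattered edits
    print(intact_fraction(100, set(range(0, 100, 4))))  # heavy rewrite
```

With a window of 5, two scattered edits in a 100-token text leave 90% of positions intact. Changing every fourth token leaves none, because every 5-token window then contains an edit.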

Limitations of SynthID Text

While SynthID Text is a powerful tool, Google acknowledges that it has limitations. It is less effective on short pieces of text. It also struggles with text that has been heavily rewritten or translated, and with responses to prompts that have essentially one correct answer, such as “What is the capital of France?” or “Recite a poem by William Wordsworth.”

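For such prompts, the model has little freedom in its choice of tokens, so there are fewer opportunities to adjust the token distribution without changing the content of the answer. Embedding a watermark in such text without compromising its accuracy is therefore difficult. Short texts pose a related problem: detection is statistical, so fewer tokens provide less evidence that a watermark is present.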
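
Both limitations can be made concrete with some back-of-the-envelope arithmetic. The sketch assumes a single two-candidate tournament round as the watermarking step, g-values that behave like fair coin flips, and a detector that compares the mean g-value with the 0.5 expected for unwatermarked text. The function names are hypothetical.

```python
import math

def expected_winner_g(probs):
    """Expected g-value of the winner of one two-candidate round, averaged
    over random g-values. If both candidates are the same token (likely when
    the model is nearly certain), there is no choice to bias, so it stays 0.5."""
    collision = sum(p * p for p in probs)  # chance both candidates are the same token
    # Distinct candidates: the winner has g = 1 unless both are 0, i.e. 3/4 of the time.
    return collision * 0.5 + (1 - collision) * 0.75

def z_score(mean_g, n_scored):
    """Standard deviations by which an observed mean g-value exceeds the
    unwatermarked baseline of 0.5, treating n_scored g-values as fair coin flips."""
    return (mean_g - 0.5) / math.sqrt(0.25 / n_scored)

if __name__ == "__main__":
    print(expected_winner_g([0.99, 0.01]))  # near-certain, "factual" answer
    print(expected_winner_g([0.25] * 4))    # four equally likely tokens
    print(z_score(0.6875, 20), z_score(0.6875, 400))
```

For a near-certain answer such as `[0.99, 0.01]`, the expected g-value is about 0.505, barely above chance. Even with a healthy per-token signal of 0.6875 (four equally likely tokens), 20 scored tokens give a z-score of only about 1.7, while 400 give 7.5.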

SynthID Text’s Integration with Google Gemini Models

Google has integrated SynthID Text into Gemini, its family of generative AI models. The integration has been in place since spring 2024, so the watermark is applied to text generated by Gemini models.

According to Google, SynthID Text does not compromise the quality, accuracy, or speed of text generation: watermarked text is indistinguishable from non-watermarked text in readability and coherence. This makes it a valuable tool for developers and businesses who want traceability without degrading the user experience.
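
One reason a scheme like this need not distort the output can be checked exactly in the simplest case. The sketch below is an illustrative assumption, not Google’s code. It takes a single two-candidate tournament round and enumerates every assignment of g-values to tokens. Any single assignment (think of it as one key and context) skews the output toward certain tokens. Averaged over all equally likely assignments, however, each token keeps exactly its original probability. This covers one round of a toy model only and does not by itself verify Google’s quality claims.

```python
from fractions import Fraction
from itertools import product

def one_round_output_dist(probs, g):
    """Exact output distribution of a two-candidate tournament round
    for a fixed assignment g[token] in {0, 1}."""
    n = len(probs)
    out = [Fraction(0)] * n
    for a, b in product(range(n), repeat=2):
        weight = probs[a] * probs[b]  # chance of drawing candidates a then b
        if g[a] > g[b]:
            out[a] += weight
        elif g[b] > g[a]:
            out[b] += weight
        else:  # tie (including a == b): each candidate wins half the time
            out[a] += weight / 2
            out[b] += weight / 2
    return out

def averaged_over_g(probs):
    """Average the output distribution over all 2**n equally likely g assignments."""
    n = len(probs)
    total = [Fraction(0)] * n
    assignments = list(product((0, 1), repeat=n))
    for g in assignments:
        for i, p in enumerate(one_round_output_dist(probs, g)):
            total[i] += p
    return [t / len(assignments) for t in total]

if __name__ == "__main__":
    probs = [Fraction(1, 2), Fraction(1, 3), Fraction(1, 6)]
    print(one_round_output_dist(probs, (1, 0, 0)))  # skewed toward token 0
    print(averaged_over_g(probs))                   # matches probs
```

Exact fractions are used so the comparison with the original probabilities is free of rounding error.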

The Broader Context: AI Text Watermarking in the Industry

Google is not the only company working on AI text watermarking. OpenAI, the organization behind models like GPT-3 and ChatGPT, has been researching watermarking methods for years, but it has delayed releasing its watermarking tools due to technical and commercial challenges.

Watermarking technology for AI-generated text is becoming more important as AI tools spread. If widely adopted, it could help address the problems posed by “AI detectors” that falsely flag text written in a generic voice as AI-generated. Such detectors guess from writing style and are often inaccurate, whereas watermark detection looks for a signal deliberately embedded during generation, making it a more reliable way to identify text from models that apply the watermark.

The Legal Push for AI Transparency

The rise of AI-generated content is not just a technological issue—it’s becoming a regulatory concern as well. Governments around the world are beginning to take action to ensure transparency in AI-generated content. For instance, China has introduced mandatory watermarking for AI-generated content, and California is considering similar regulations.

The push for regulation reflects the growing urgency of the situation. A report by Europol, the European Union’s law enforcement agency, estimates that as much as 90% of online content could be synthetically generated by 2026. This rapid increase in AI-generated content presents new challenges for law enforcement, particularly in dealing with disinformation, fraud, and other forms of online deception.

AI tools such as language models and machine translation systems already account for a significant share of online content. According to a study by researchers at Amazon Web Services (AWS), nearly 60% of sentences on the web may be machine-translated, reflecting the widespread use of AI-powered translation tools.

The Future of AI Watermarking

As AI-generated content becomes more prevalent, watermarking technologies like SynthID Text will likely play a critical role in maintaining transparency and accountability. However, the widespread adoption of these technologies is still uncertain. While some companies may choose to implement watermarking to ensure transparency, others may not see the immediate benefits, especially if it involves modifying their AI models or changing the way they generate content.

The success of SynthID Text and other watermarking tools will depend on several factors, including industry standards and regulatory requirements. If governments around the world begin to mandate watermarking for AI-generated content, it could lead to more widespread adoption of these tools. Furthermore, the development of industry-wide standards for watermarking could help ensure that different tools and methods are compatible, making it easier to detect AI-generated content across different platforms.

Google’s release of SynthID Text is a significant step toward greater transparency in AI-generated content. As the use of AI tools grows, technologies like SynthID Text will become increasingly important for ensuring that AI-generated content can be identified and traced back to its source. Challenges remain, particularly with short texts and factual answers, but SynthID Text offers a promising response to one of the major concerns raised by generative AI.

The future of AI-generated content will likely involve a combination of technological innovation and regulatory oversight. As more governments and companies recognize the importance of transparency in AI, tools like SynthID Text could become an essential part of the AI landscape.
